Research

This list only includes a selection of our research.

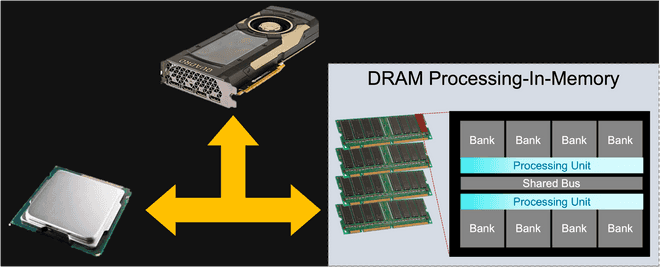

DRAM Architecture & Memory-system Research

DRAM has served as the main memory of modern computer systems for decades. While excellent DRAM architecture research has advanced the field so far, new problems have recently emerged in DRAM reliability (e.g., in-DRAM ECC), security (e.g., Row Hammer), and capacity (e.g., the power and performance overheads of cell refresh). Many solutions have been proposed to tackle these issues, but they often add overhead to DRAM access latency. In addition, more applications now demand higher bandwidth between the processor and main memory. We are therefore pursuing research on 1) new solutions for DRAM reliability, security, and capacity issues, 2) low-latency DRAM architectures that either directly improve DRAM access latency parameters (e.g., tRCD, tRP, and tCL) or improve related factors (e.g., refresh policies and DRAM page management policies), and 3) near-DRAM and in-DRAM data processing solutions that improve performance by exploiting DRAM internal bandwidth.
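As a rough illustration of how the timing parameters named above contribute to access latency, the sketch below uses the common first-order model in which a read to an already open row costs tCL, a read to a closed (precharged) bank costs tRCD + tCL, and a read that conflicts with a different open row costs tRP + tRCD + tCL. The class name, function name, and the 14 ns values are assumptions made for this example only; they are not taken from any specific device or from our papers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DramTiming:
    """Hypothetical timing parameters in nanoseconds (illustrative values only)."""
    tRCD: float = 14.0  # delay from ACTIVATE to a READ/WRITE command
    tRP: float = 14.0   # time to PRECHARGE (close) an open row
    tCL: float = 14.0   # delay from a READ command to the first data


def access_latency(timing: DramTiming, row_state: str) -> float:
    """First-order read latency for one access, ignoring queuing and refresh."""
    if row_state == "hit":       # the requested row is already open
        return timing.tCL
    if row_state == "closed":    # the bank is precharged; activate, then read
        return timing.tRCD + timing.tCL
    if row_state == "conflict":  # another row is open; precharge, activate, read
        return timing.tRP + timing.tRCD + timing.tCL
    raise ValueError(f"unknown row state: {row_state!r}")


if __name__ == "__main__":
    timing = DramTiming()
    for state in ("hit", "closed", "conflict"):
        print(f"{state:>8}: {access_latency(timing, state):.1f} ns")
```

Under this simplified model, an open-page policy bets on row hits at the risk of conflicts, while a closed-page policy precharges after each access so that every access pays the "closed" cost. This is one reason page management policies appear among the latency-related factors.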

Recent Papers: EuroSys 2025, ASPLOS 2025, ASPLOS 2024, ISCA 2024, HPCA 2024, MICRO 2023, CAL 2023, CAL 2023, TC 2023, CAL 2023, HPCA 2023, CAL 2022, TECS 2022, MICRO 2021, LCTES 2021, ASPLOS 2021, CAL 2021

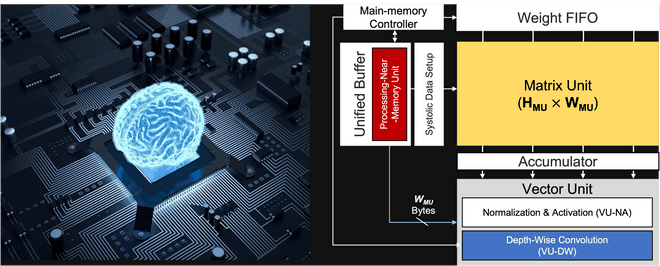

Hardware Accelerator Architecture for Deep Learning Applications

Deep learning is widely used in computer vision, speech recognition, natural language processing, signal processing, and recommendation systems. It is one of the key technologies for artificial intelligence (AI) and is developing rapidly. Convolutional neural networks (CNNs), long short-term memory (LSTM) networks, and Transformers are representative neural networks currently in the spotlight. We pursue accelerator research that proposes faster and more energy-efficient architectures based on a deep understanding of the computation characteristics and data access patterns of these neural networks.

Recent Papers: MICRO 2024, ASPLOS 2024, CAL 2023, IISWC 2022, IISWC 2022, TC 2022, TODAES 2022, CAL 2022, MICRO 2021, CAL 2021, CAL 2021, TC 2021, SysML 2021

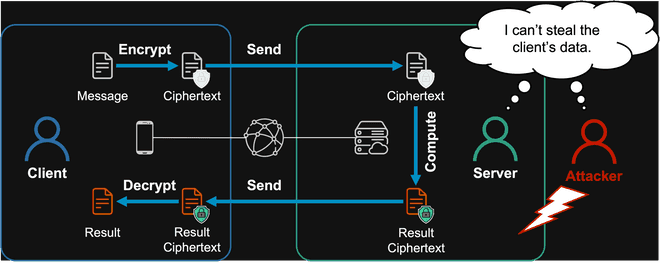

Investigation and Optimization for Fully Homomorphic Encryption Acceleration

With the development of cloud computing, computations are executed in data centers more often than on personal devices. However, cloud computing exposes additional security and privacy vulnerabilities. To address this issue, homomorphic encryption (HE), which enables computation on encrypted values, has recently been gaining attention. Using HE, a cloud service provider can compute on user data without decrypting it. However, the key drawback of fully homomorphic encryption (FHE) schemes, which allow an unlimited number of computations on encrypted data, is their high computational cost, caused by the increased size of the encrypted data and the added computational complexity (over a 10,000x increase for certain key operations compared to the same operations on unencrypted data). We pursue research on accelerating FHE to a practical level, building on the skills and knowledge gained from our prior work on optimizing other emerging applications. More specifically, we aim to speed up the commercialization of FHE by investigating the characteristics of the multiple functions used in FHE and accelerating them on CPUs and GPUs, two of the most widely used computing platforms, which provide hundreds to thousands of ALUs.
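To make the idea of computing on encrypted values concrete, the sketch below uses textbook RSA, which is only partially homomorphic: multiplying two ciphertexts yields a ciphertext of the product of the plaintexts. It is not an FHE scheme (FHE supports both addition and multiplication on ciphertexts), it is not secure with such tiny unpadded parameters, and it does not represent the schemes we accelerate. The parameters and function names are assumptions chosen purely for illustration.

```python
# Toy textbook-RSA parameters (insecure, for illustration only).
P, Q = 61, 53
N = P * Q   # public modulus, 3233
E = 17      # public exponent
D = 2753    # private exponent: (E * D) % ((P - 1) * (Q - 1)) == 1


def encrypt(message: int) -> int:
    """Encrypt an integer in the range [0, N) with the public key."""
    if not 0 <= message < N:
        raise ValueError("message out of range for this toy modulus")
    return pow(message, E, N)


def decrypt(ciphertext: int) -> int:
    """Decrypt with the private key."""
    return pow(ciphertext, D, N)


def multiply_encrypted(c1: int, c2: int) -> int:
    """Multiply two ciphertexts; the result decrypts to the plaintext product mod N."""
    return (c1 * c2) % N


if __name__ == "__main__":
    c_a, c_b = encrypt(7), encrypt(6)
    # The "server" only sees c_a and c_b, never 7 or 6.
    c_product = multiply_encrypted(c_a, c_b)
    print(decrypt(c_product))  # 7 * 6 = 42, recovered by the key holder
```

Even in this toy, each operation on a small integer becomes modular arithmetic on larger values, which hints at why practical FHE schemes, with much larger ciphertexts, are so computationally demanding.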

Recent Papers: HPCA 2025, Access 2024, ISCA 2023, MICRO 2022, ISCA 2022, TCHES 2022, Access 2021